In fact, I first wrote a paper on currency unions and trade as a class paper when I was a grad student in Alan Taylor's excellent course in Open-Economy Macro/History. A doubling of trade due to a currency union looked like a classic case of "endogeneity" to me. Currency unions are like marriages: they don't form or break apart for no reason. They are as non-random a treatment as you'll find. In addition, we know from Klein and Shambaugh's excellent work that direct pegs seem to have a much smaller impact on trade, and that indirect pegs -- which are probably formed randomly -- have no effect at all. Thus we know that the effect of currency unions doesn't operate via exchange rate volatility, the most plausible channel. Also, the magnitudes of the effects bandied about are much too large to be believed. Consider that Doug Irwin finds that the Smoot-Hawley tariff reduced trade by 4-8%. How plausible is it that currency unions have an impact at least 12 times larger? Or the Euro six times larger? It isn't. Even a 5% increase in trade implies an increase of tens of billions of dollars for the EU -- probably still too large. My intuition is that something on the order of 0.05-0.5% would be plausible, but too small to estimate.
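For readers who want the arithmetic behind these comparisons spelled out, here is a short sketch in Python (used purely for illustration; the original work was done in Stata). Its only inputs are figures quoted in this post: the upper end of Irwin's 4-8% Smoot-Hawley estimate, a doubling of trade for currency unions, and the 50% Euro effect Glick and Rose argue for in their 2016 paper. It also converts each effect into the log-point coefficient a gravity regression would have to report, using the standard mapping effect = exp(b) - 1. The constant and function names are my own.

```python
import math

# Figures quoted in the post, expressed as proportional changes in trade.
SMOOT_HAWLEY_MAX = 0.08  # upper end of Irwin's 4-8% estimate
CU_EFFECT = 1.00         # "currency unions double trade" = +100%
EURO_EFFECT = 0.50       # Euro effect argued for in the 2016 paper

def times_larger(effect, benchmark=SMOOT_HAWLEY_MAX):
    """How many times larger an effect is than the Smoot-Hawley benchmark."""
    return effect / benchmark

def log_points(effect):
    """Gravity coefficient b implied by a proportional effect, since effect = exp(b) - 1."""
    return math.log1p(effect)

print(round(times_larger(CU_EFFECT), 2))    # 12.5
print(round(times_larger(EURO_EFFECT), 2))  # 6.25
print(round(log_points(CU_EFFECT), 3))      # 0.693
print(round(log_points(EURO_EFFECT), 3))    # 0.405
```

Using the lower end of Irwin's range (4%) instead would double both ratios.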

Since I was skeptical, I fired up Stata. It then took me approximately 30 minutes to make the magical -- yes, magical -- effect of currency unions on trade disappear, at least for one major sub-sample -- former UK colonies. It took only a bit more time to notice that the results were also driven by wars and missing data, but it was still a quick paper.

In any case, to his credit, Reuven Glick responds, making many thoughtful points. Here he is:

My co-author, Andy Rose, already responded to the comments on your blogsite about our recent EER paper. I'd like to offer my additional one-shot responses to your comments of March 24, 2017 and your follow-up on April 24, 2017.

•As Andy noted, we find significant effects for many individual currency unions (CUs), not just for those involving the British pound and former UK colonies. Yes, the magnitude of the trade effects varies across these unions, but that’s not surprising. So the “magical” currency union effect doesn’t disappear, even when attributing the UK-related effects to something other than the dissolution of pound unions.

There is a pattern in this literature. You guys find a large trade impact, and then somebody points out that it isn't robust -- in short, that the effect does disappear. For example, Bomberger (no paper online, but Rose helpfully posted his referee report here) showed that your earlier results go away for the colonial sample once colony-specific time trends are included, while Berger and Nitsch have already shown that a simple time trend kills the Euro's impact on trade. Each time, you guys come back with a larger data set and show that the impact is robust. However, the lessons from the literature curiously do not get internalized; the controls that reversed your previous results are forgotten.

Since you mention the UK, let's have a look at a simple plot of trade between the UK and its former colonies vs. trade between the UK and countries with which it had currency unions. What you see below are the estimated dummies from a gravity regression, plotted for the UK with its former colonies vs. the UK with countries that ever had a UK currency union. Note that the UK had something like 60-plus colonies, while only 20-something countries had currency unions with it, only one of them a non-colony. The plot shows that the evolution of trade between the UK and its former colonies is quite similar to the evolution of trade between the UK and the countries that shared a CU with it. The blue bars (axis at left) show the dates of UK currency union dissolutions, mostly during the Sterling Crisis of the 1960s. What one sees is a gradual decay of trade for both UK colonies and countries ever in a currency union.

So, yes, including a time trend control does in fact make the "magical" result go away. In my earlier paper, I got an impact of 108% for UK currency unions with no time trend control, but -3.8% (not statistically significant) when I include a simple UK colony*year trend control. That's a stark difference from the introduction of a mild control. And in your earlier paper (Glick and Rose, 2002), the UK colonial sample comprises one-fifth of the CU changes with time-series variation in the data. In addition, the disappearance of the CU effect for UK CUs raises the question of how robust it is for other subsamples, where CU switches are also not exogenous -- think India-Pakistan. It turns out that almost none of it is robust. Yet you do nothing to control for the impact of decolonization in your most recent work, nor do you ask what might be driving your results in other settings.
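Here is a minimal simulation of that point, written in Python/NumPy rather than Stata. Everything in it is a hypothetical assumption for illustration, not the Glick-Rose data or the exact specification from my paper: 30 colony pairs and 30 control pairs, colonial trade decaying about 3% (0.03 log points) a year after 1950, half the colonies leaving a currency union in a year drawn from 1960-79, and a helper called `cu_coefficient`. By construction, the currency union has zero effect on trade. A regression with pair and year fixed effects nonetheless reports a large CU effect; adding a linear colony*year trend brings it back to roughly zero.

```python
import numpy as np

rng = np.random.default_rng(0)
YEARS = np.arange(1950, 2000)
N_COLONY, N_CONTROL = 30, 30  # hypothetical numbers of UK trading pairs

# Hypothetical data: former colonies' trade with the UK decays about 3% a year;
# half of them also leave a currency union in some year between 1960 and 1979.
# The CU itself has no effect on trade.
pair, year, colony, cu, log_trade = [], [], [], [], []
for p in range(N_COLONY + N_CONTROL):
    is_colony = p < N_COLONY
    exit_year = int(rng.integers(1960, 1980)) if is_colony and p % 2 == 0 else None
    alpha = rng.normal()  # pair-specific level of trade
    for yr in YEARS:
        pair.append(p)
        year.append(yr)
        colony.append(float(is_colony))
        cu.append(1.0 if exit_year is not None and yr < exit_year else 0.0)
        decay = -0.03 * (yr - YEARS[0]) if is_colony else 0.0
        log_trade.append(alpha + decay + rng.normal(0, 0.1))

pair, year = np.array(pair), np.array(year)
colony, cu, log_trade = np.array(colony), np.array(cu), np.array(log_trade)

def cu_coefficient(colony_trend):
    """OLS coefficient on the CU dummy with pair and year fixed effects,
    optionally adding a linear colony*year trend."""
    pair_fe = (pair[:, None] == np.unique(pair)[None, :]).astype(float)
    year_fe = (year[:, None] == YEARS[None, 1:]).astype(float)  # 1950 is the base year
    columns = [cu[:, None], pair_fe, year_fe]
    if colony_trend:
        columns.append((colony * (year - YEARS[0]))[:, None])
    beta, *_ = np.linalg.lstsq(np.hstack(columns), log_trade, rcond=None)
    return beta[0]

naive = cu_coefficient(colony_trend=False)
with_trend = cu_coefficient(colony_trend=True)
print(f"CU effect, pair + year FE:           {np.expm1(naive):+.0%}")
print(f"CU effect, adding colony*year trend: {np.expm1(with_trend):+.0%}")
```

The mechanism is the one described above: with pair fixed effects, the CU dummy is identified only from changes within a pair, so any smooth decline that straddles the dissolution dates loads onto the dummy unless the trend is modeled directly.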

The thing is, it isn't just that you didn't see my paper (which I emailed to you). Your coauthor clearly refereed Bomberger's paper, which looks to me like a case of academic gatekeeping. I love academia, damnit! Bomberger deserved better than what he got. I want the profession to produce results people can believe in. With all due respect, you guys are repeat offenders at this stage. If this were your first offense, I would not have been so aggressive. And yes, I'll confess to having been put off by the fact that you thanked me (even though I did not comment on the substance of your paper) but did not cite me.

•We tried to control for as many things as possible by including the usual variables, such as former colonial status, as well as appropriate fixed effects, such as country-year and pair effects. Yes, one can always think of something that was left out. For example, if you’re concerned about the effects of wars and conflicts, check out my paper with Alan Taylor (“Collateral Damage: Trade Disruption And The Economic Impact Of War,” RESTAT 2010), where we find that currency unions still had sizable trade effects, even after controlling for wars (see Table 2). Granted, the data set only goes up to 1997 in that paper, and we don’t address the effects of the end of the Cold War, the dissolution of the USSR, and the Balkan wars. That might make an interesting project for one of your graduate students to pursue.

OK, sure. I would like to believe that figuring out what was driving the CU effect on trade was incredibly difficult, and that I was able to solve the puzzle only through genius, but the reality is that others also had prescient critiques (Bomberger, for one; others pointed to decolonization as a factor or reversed earlier versions of the CU effect; and let's not forget my undergraduates). I don't get the sense that you guys really searched very hard for alternative explanations (India and Pakistan hate each other!) for the enormous impacts you were getting. It looks to me more like you included standard gravity controls and then weren't overly curious about what was driving your results. As my earlier paper notes, it's actually quite difficult to find individual examples of currency unions in which trade fell (or rose) only right after dissolution (or formation) without a rather obvious third factor driving the results (decolonization, a communist takeover, the EU). Even in your recent paper, you find strong "pre-treatment trends" -- trade is declining long before a CU dissolution. In modern applied micro, strong pre-treatment trends are further evidence that the treatment is not random. It should have been a red flag.
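The standard way to check for pre-treatment trends is an event study: replace the single 0/1 dummy with a separate dummy for each year relative to dissolution (omitting the year just before it) and keep the pair and year fixed effects. The Python/NumPy sketch below runs one on simulated data. The data-generating process (treated pairs whose trade falls about 3% a year for reasons unrelated to the currency union, flat never-treated controls, dissolution dates drawn from 1960-79) is a hypothetical assumption, not anyone's actual data. The true effect of dissolution is zero, yet the leads come out clearly positive (trade was higher years before dissolution than just before it) and the lags negative, which is exactly the pattern that should raise a red flag.

```python
import numpy as np

rng = np.random.default_rng(1)
YEARS = np.arange(1950, 2000)
N_TREATED, N_CONTROL = 20, 20  # hypothetical pair counts

# Hypothetical data: treated pairs' trade declines about 3% a year regardless of
# the CU, and each dissolves its CU in a year drawn from 1960-1979. Controls are
# flat and never in a CU. The true effect of dissolution is zero.
pair, year, event_time, log_trade = [], [], [], []
for p in range(N_TREATED + N_CONTROL):
    treated = p < N_TREATED
    exit_year = int(rng.integers(1960, 1980)) if treated else None
    alpha = rng.normal()  # pair-specific level of trade
    for yr in YEARS:
        pair.append(p)
        year.append(yr)
        event_time.append(int(yr) - exit_year if treated else None)
        decline = -0.03 * (yr - YEARS[0]) if treated else 0.0
        log_trade.append(alpha + decline + rng.normal(0, 0.1))

pair, year, log_trade = np.array(pair), np.array(year), np.array(log_trade)

# One dummy per event time (years relative to dissolution), omitting e = -1.
event_times = sorted({e for e in event_time if e is not None and e != -1})
event_dummies = np.array([[1.0 if e == k else 0.0 for k in event_times] for e in event_time])
pair_fe = (pair[:, None] == np.unique(pair)[None, :]).astype(float)
year_fe = (year[:, None] == YEARS[None, 1:]).astype(float)  # 1950 is the base year

X = np.hstack([event_dummies, pair_fe, year_fe])
beta, *_ = np.linalg.lstsq(X, log_trade, rcond=None)
effect = dict(zip(event_times, beta[: len(event_times)]))

# Leads (e < 0) should be near zero if dissolution were as good as random.
for e in (-10, -5, -2, 0, 5):
    print(f"event time {e:+d}: {effect[e]:+.2f} log points")
```

A real application would also bin distant event times and cluster standard errors by pair; both are left out to keep the sketch short.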

•I agree that it is important to disentangle the effects of EMU from those of other forms of European trade integration, such as EU membership. In our EER paper we included an aggregated measure that captures the average effect of all regional trade agreements (RTAs). Of course, aggregating all such arrangements together does not allow for possible heterogeneity across different RTAs. To see whether this matters, take a look at a recent paper of mine (“Currency Unions and Regional Trade Agreements: EMU and EU Effects on Trade,” Comparative Economic Studies, 2017), where I separate out the effects of EU and other RTAs so as to explicitly analyze how membership in the EU affects the trade effects of EMU. I also look at whether there are differences in the effects between the older and newer members of the EU and EMU, something that should be of interest to those of your students working on East European transition economies. I find that the EMU and EU each significantly boosted exports, and allowing separate EU effects doesn't "kill" the EMU effect. Most importantly, even after controlling for the EU effects, EMU expanded European trade by 40% for the original members. The newer members have experienced even higher trade as a result of joining the EU, but more time and a longer data set are necessary to see the effects of their joining EMU.

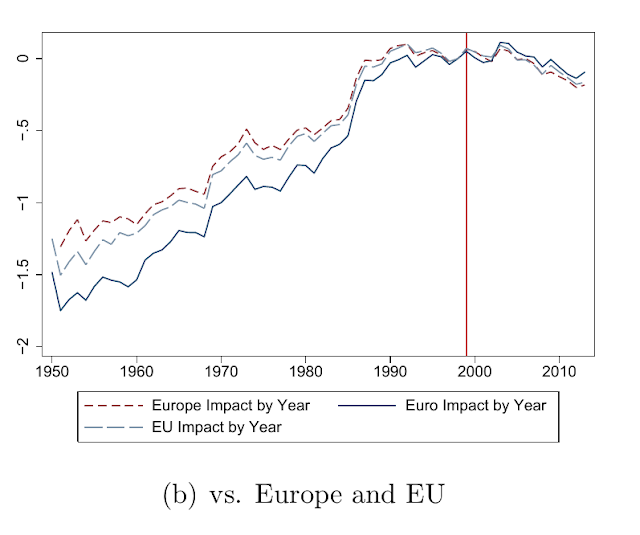

OK, but a 40% increase that you find in your 2017 paper is already 25% smaller than the 50% you guys argue for in your 2016 paper. At a minimum, the result seems sensitive to specification. I'm not convinced that a single 0/1 RTA dummy for all free trade agreements is remotely enough to control for the entire history of European integration, from the Coal & Steel Community, to the ERM and EU. Also, 0/1 dummies imply that the impact happens fully by year 1, and ignores dynamics -- part of my earlier critique. If two countries go from autarky to free trade, the adjustment should take more than just one year. On a quick look at your 2017 paper, I'd say: I'd like to see you control for an Ever EU*year interactive fixed effect. If you do that, you'll kill the EMU dummy much like the EMU did to the Greek economy. I'd also like to see you plot the treatment effects over time, as I did in my previous post. (Here it is again:)

Once again, I don't know how you can look at the figure above and cling to a 40 or 50% impact of the EMU. The pre-EMU increase in trade mostly happens by 1992. This increase happens for all EU countries, and indeed for all Western European countries. Those that eventually joined the Euro even have trade increasing faster than the EU or Western Europe as a whole before the EMU. Even if you ignore this pre-trend, you could get an impact of several percent at most for the difference between the Euro countries and the EU/Europe in the graph above by 2013, and that difference won't be statistically significant.

•Andy and I agree that the endogeneity of CUs could be a concern, and suggested that employing matching estimation might be one way to approach the issue. Perhaps this would be another good assignment for one of your graduate students, who are interested in doing more than “seek and destroy.”

I agree with this. The point shouldn't be to destroy, but to provide the best estimate possible. I would be more than happy to join forces with you guys and one of my graduate students in writing a proper mea culpa, to nip this literature in the bud. It would certainly take a lot of intellectual integrity, and you would deserve to be commended for it. (Although I'd note that your results have already been reversed.) Your new paper has a larger data set, but it is vulnerable to the same concerns already raised in the literature.

•Lastly, our recent EER paper has over 40 references; sorry we didn’t include you. Please note that your citation date for our paper should be corrected; the paper was published in 2016, not 2017.

OK. You also should have cited Bomberger's unpublished manuscript, which killed your results on a key subsample.

One question -- now that you guys have my earlier paper in hand and know, for example, that a time trend kills the UK CU effect and that missing data and wars are driving the other results, and now that you've seen my figure above showing that the EMU increased trade by at most a couple percent, do you still believe that currency unions double trade, on average? Or, put differently, which parts of my earlier paper did you not find convincing? And which parts were convincing?